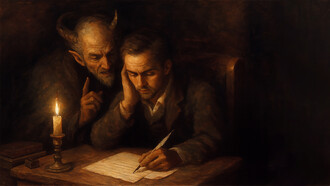

The idea of being a writer has long been romanticized, but the challenges writers face go far beyond the charming stereotype of a solitary author living in the mountains, drinking tea, and wearing stylish glasses. The “writer’s life” is a dream pursued by many. The reality, however, is fierce competition: very few make it to major publishers, have their work acknowledged by a wide audience, or capture truth and depth about society or in fiction, all while making a living from it.

The rise of AI initially made certain goals easier to reach, especially for college students struggling with time management. With just a few prompts, an assignment could be ready for submission. What first looked like a source of relief has since become a source of anxiety, as many writers and students have seen their own work falsely flagged as AI-generated.

Jisna Raibagi, a 26-year-old student from India, said that in early 2022 she had never used AI, and only later realized her college peers already were. “I stressed out, trying to make the best of it. Writing always felt overwhelming; I don’t remember most of the things I wrote. When I learned people were using AI, I felt a little bit stupid.” While Jisna regrets not using AI to pass her classes, many students today share tips on how to keep their assignments from being falsely flagged as AI-written. In just three years, ChatGPT and similar tools have become shortcuts that some students rely on to meet academic demands. The real problem arises when students who don’t use AI still see their work scored as zero because a detection tool claims it was machine-generated.

In college, students accused of using AI have the right to appeal, respond to a panel, and discuss the issue with lecturers and coordinators. On Reddit, they share advice such as recording their screens or saving document histories as proof. Content writers, however, face the same accusations with no professor to appeal to. In a professional environment, how should you respond?

Juliana, an actress, screenwriter, and content writer, said she was shocked when her editor told her that her 4,000-word essay had been classified as AI-generated. She believes her frequent use of dashes may have triggered the false accusation. “I’ve worked in content and copywriting for 10 years, and I take my job very seriously. I had to change my writing style so it wouldn’t be flagged as AI-generated.” She also worries that these tools could damage her career.

On LinkedIn, many users complain about the same problem. While there are no reliable statistics yet on how many professionals are affected, it is clear that they know they can be wrongfully accused. Senior content writer Himani Aggarwal posted:

> First, it was plagiarism; now it’s AI detectors. I could spend hours pouring my thoughts into a blog, shaping it with my voice, my experiences, and my stories. And yet—one scan later, I’m told, ‘This looks AI-generated.’ Why? Because I dared to start a sentence with ‘Un-.’ Because I used transitions like ‘Moreover’ or ‘Hence.’ Because my style doesn’t look ‘human enough’ to a machine. It feels like writers are always on trial. Yesterday, it was ‘prove you didn’t copy another writer.’ Today, it’s ‘prove you didn’t copy a machine.’

Turnitin is among the most widely adopted tools in the U.S. for detecting AI-generated text. The company concedes on its website that the software is not perfectly accurate, reporting a false-positive rate of under 1%. That sounds small, but if a million human-written papers were checked, a rate just under 1% could still mean close to 10,000 false accusations. The question remains: how many voices, ideas, or careers can be harmed by even that small margin of error?

Other detection tools used in academic settings include GPTZero, Copyleaks, and PlagiarismCheck.org. All of them try to spot signs of AI generation using measures such as how “predictable” a text is, its writing style, or its similarity to AI-training material.

Many people claim AI-generated text is easy to recognize, pointing to the AI-written comments that appear on social media. In the workplace, however, telling human and machine writing apart becomes a real challenge. The real world cannot run on the tidy assumptions of a sci-fi movie or novel, and the situation forces us to ask: how do we humanize writing, and how do we recognize what is truly human-made?

There is also a paradox worth noting: companies do not want AI-generated text, yet they rely on AI tools to judge a writer’s honesty. And the suspicion runs in only one direction. AI-generated text is often assumed to be suspicious, false, or poorly written, but once the software “decides” a text is human, it is automatically considered safe and trustworthy.

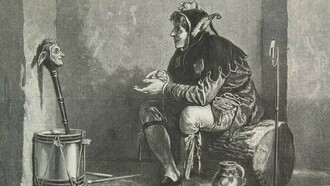

In some ways, it feels like Blade Runner. In the film, the Voight-Kampff test relies on a machine to decide who is human and who is not. Much of the film’s tension comes from the test being fallible, or at least incomplete, because the replicants begin to show emotions, memories, and empathy, qualities that are supposed to be human. In our reality, the subject is not robots but texts, and AI detectors like Turnitin play the same role, declaring, “This is human; this is not.” Yet the parallel comes with a twist. In the film, the test’s weakness is that it struggles to detect replicants; today’s detectors can also fail the other way, accusing real humans of being fake.

One student on Reddit shared that their strategy for staying “human” was to leave grammar mistakes in their essays on purpose. It made me wonder whether written language is evolving to look more like speech: informal, flexible, and constantly changing. AI tools may catch patterns for now, but they may never fully keep up, because language itself moves so fast. Slang, style, and syntax all shift, and humans adapt naturally. Over time, writing may become more universal and less strictly academic, reflecting how we really communicate.

The irony is striking: the very tools meant to protect authenticity are chasing a moving target, much as the Voight-Kampff test in Blade Runner struggles to decide what is truly human. And like the replicants, writers will always find ways to assert their humanity, even through tiny, imperfect “errors” that no machine can convincingly imitate.